As we all know, data is critical to the success of a business. Well… at least quality, accessible data can have a huge impact…

Quality data can help us identify leading indicators for key business metrics. It can help us replace subpar proxies. It can even lead to notable increases in revenue.

However, to create quality data that is truly useful, we must first prepare the data. The goal in preparing the data is to find and address common data issues. For this, we use a technique called data exploration.

Data exploration is the first step in the data analysis process. It involves finding patterns and characteristics in the data that help a data scientist form a baseline before doing a deep dive into the data.

So let’s take a deeper dive ourselves into what data exploration is...

The Starting Point of Your Business Data

Most of the information that you’ll be analyzing is stored in a tabular format, or in columns and rows, such as a spreadsheet.

Though you may have some other data types that aren’t in that form. Other data types can be less structured, like texts, images and speeches. These types of data will require different kinds of exploration.

Tabular data can be easier to read and understand, as it's already categorized in some ways. This is especially true when the basics of the data are summarized and described.

We can use tabular data to do things such as mapping and visualization of relationships between two attributes. Once we perform these, we can move on to data management tasks.

But it’s the data exploration step, which extracts useful insights from the raw data, that contributes to the success in machine learning modeling. This modeling allows for decision making.

Given that, we will focus on why we do data exploration, and the impacts of it on model predictions.

So why do we need data exploration in ML?

To get deeper information from our machine learning, we first need to identify data imperfections.

Whether a data set is large or small, there is almost always some data imperfections that need to be accounted for or closely examined first.

Once this is done, we can look for attributes that have an influence on the model output. We can also decide which model is most suitable or ideal.

Critical steps in the data exploration journey

We often repeat three key steps in the data exploration journey. Each step is critical to account for common errors or issues in the data. Let’s review each step.

Step #1: Find missing values

The problem of missing data frequently occurs in datasets.

These missing pieces can occur at random or can be systematically excluded.

It isn't always the end of the world in larger data sets because there are usually plenty of additional data points to analyze. This means the missing rate is usually low enough to not be a problem.

However, with smaller data sets missing data pieces can cause big issues. They can reduce the fit of the model, causing it to have a biased result. This renders the predictions and classifications far less useful.

We can solve for missing values by making a guess based on other data points. Or we can remove data points that are related to the missing ones.

It depends on how much data is missing. This choice is up to the data scientist once they’ve considered other factors, such as how much data is missing and where in the data set it is occurring.

Step #2: Account for data sparsity

As opposed to missing values, sparsity indicates that you have the data points you need. However, a lot of them are zeroes!

Zeroes aren't typically thought of as a great sign...

Sparsity can illustrate how important (or unimportant) a feature is to the user.

When sparse features are viewed alongside other more dense attributes in the model, we can run into issues of overfitting. This can lead to underestimating their importance.

Even if a feature appears as "sparse" in a dataset, it may still have some predictive power.

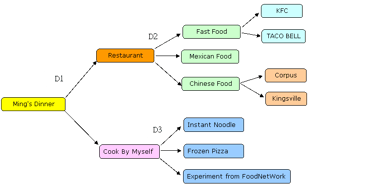

Many of us have used a decision tree model, like this:

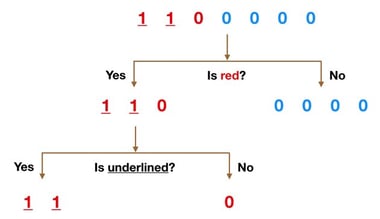

But when we're using them in data science, they look more like this:

These trees make up random forest models. When there are too many zeroes occurring in them, it can cause the model to overpredict features that are denser than others in the group.

Thus, we think something is more or less likely to happen because of the zeroes. But that's not actually correct.

To solve for sparse data, we can remove the feature with the sparsity. Or we can separate out the zeroes and examine them separately from the denser data. This enables us to adjust the model to account for sparsity.

Step #3: Manage Outliers

Outliers are observations that are very large or small compared to the other observations in a data set.

They can skew your averages and have an impact on experiments and results.

Outliers can happen in any data set. They can happen because of reporting or data processing errors (human), or one-off events that occurs.

For example, we often see SaaS companies with a larger than usual annual recurring revenue (ARR) on a yearly product renewal simply because they have a small customer base.

Any one-off outlier needs to be researched before it's inputted into a data model.

Sometimes it’s not right to use the outlier in the data model. However, often just by looking at it more deeply, we learn more about a certain space and how to build better models in the future.

Now we can Automate Feature Engineering in our AI Model

Now that we’ve solved for the common data issues, we can start the process of building our artificial intelligence model and get to know our data even deeper.

Doing the preliminary data exploration and gaining a full understanding of the components is a critical step in machine modeling. Feeding your machine learning model accurate data ensures that what you get from it is actually usable.

We can also do automated feature engineering now. This is a process of automatically constructing new features from the data, and then creating robust predictions in the model.

Feature engineering can be an arduous process, but because we completed data exploration, it can be automated!

Thinking about your Data Strategy with Tingono

Now that you have a better understanding of why data exploration is important, you can understand why we take it so seriously here at Tingono.

Our team of data scientists is working with huge amounts of business data from varying sources, different sizes, and with different goals. Data exploration gets us all on the same page, and allows us to customize solutions.

If you want to learn more about how Tingono is utilizing business data for growth and retention, drop us a line!